Technical interviewing is profoundly broken. The problem isn’t confined to either the job-seeking or the hiring side. For those looking for a job, it frequently boils down to little more than an algorithm lottery on how quickly you’ll spot the “ah ha” moment. On the hiring side, it amounts to a mountain of weak signals and interview wrap-ups full of “I’m just not sure.” Luck is a considerable factor on both sides, and the result is a tremendous amount of wasted energy all around.

Why is this the case? Largely it’s a combination of ossified bad practices, a failure to define the skills we actually need, and a misunderstanding of what problem solving really is.

This is about the concrete steps of interviewing, but we must take a large detour through the realm of problem solving to make it sufficiently coherent and relevant. Also, this is not about weeding out negative candidates faster; it’s about reducing false negatives (people you should have hired but didn’t) and false positives (people you did hire but shouldn’t have) through improved signaling and reduced guessing. Lastly, behavioral interviewing is also very important, but it is not the focus here.

tl;dr – Abstract

Ditch the whiteboard unless the candidate is drawing a block diagram to further a discussion. Clearly delineate the skills someone would need to succeed in the role. If candidates are leaving the interview and you feel like you’ve got weak signals, you’re asking useless questions or don’t understand the role. If the role involves coding and problem solving: laptop + dynamic problem solving is the only way to go.

So you need to hire someone – Distilling need

Let’s start at the top. I believe it is a fair statement to say we hire because there’s work to be done and not enough people to do it. Great. Since you have at least some rudimentary backlog of work and a future direction, you should have an understanding of what knowledge, skills, and competencies a person should have to achieve success in the role. Without this guiding light you have nothing to measure a candidate against.

If you’re hiring a developer, what kind of developer do you need? What degree of seniority? All too often companies implement a one-size-fits-all technical interview that applies the same rubric to a new college graduate, a self-taught individual, or someone with 15+ years of experience. This is nonsense: the level of experience of the candidate will change the interview considerably. In order to calibrate these changes, we need to solidly know what it is we expect at different levels.

I chose my earlier words very carefully: knowledge, skills, and competencies. These are the paraphrased definitions from the Centre for European Research on Employment and Human Resources:

- Knowledge is the information we have acquired about the world, the product of our capacity to learn and our opportunity to learn. It’s the background, base-level information we need to perform a job. This is knowing a programming language, knowing that Photoshop is an image editor, or knowing that DNS runs on port 53.

- Skills are the specializations we acquire from experience when our knowledge intersects the opportunity to apply our knowledge. Applying programming to build systems, being able to paint in Photoshop, being able to diagnose DNS problems are all skills.

- Competence is a thornier one. The literature offers a less concise, context-dependent definition: ‘characteristic of an individual that has been shown to drive superior job performance’ (Hartle 1995). It also frequently includes the different combinations of skills and their necessary levels, along with the personality characteristics needed to achieve success. These are principally sussed out in behavioral interviews and thus not a key focus here.

The key advantage of having a framework of capabilities and requirements is that we can now go step-by-step through an interview process and verify we are measuring the things we need clearly. At any given step we should clearly be able to articulate the knowledge or skill being scrutinized and why it’s being measured.

With actual job requirements in hand, let’s talk about the big three of bad signaling: whiteboard problem solving, whiteboard coding, and architectural medley.

Whiteboard Problem Solving and Why It’s Worthless

The most odious first. I don’t know anyone who feels this particular whiteboard segment of a technical interview is worth anyone’s time. Yet it is ubiquitous. For the interviewer it’s usually boring and routine, and for the interviewee it’s usually little more than a game of chance. If you’re not familiar, it usually goes like this:

“Ok. This is going to be the problem solving part of the interview. I’m going to give you a problem and I want you to think about it, come up with a solution, and then write some code to actually do it. Here’s the first step: consider a list of integers. They might be positive or negative. How can you most efficiently determine that any three of them sum to zero?”

Assuming you get the first one right (they’re almost always broken into an ‘easy’ step and a ‘hard’ step), the follow-up goes something like this:

“Ok, great. How about this: given the same list of integers, can you determine the longest monotonically increasing subsequence? So if you have 1, 10, 2, 3, 6, 4, 5, you would pick 1, 2, 3, 4, 5.”

Take a look at your list of knowledge, skills, and competencies. Which of the three is this actually measuring? This segment will almost certainly be said to assess one’s “problem solving ability” or “CS fundamentals.” In practice, this is largely inaccurate and amounts to little more than guessing. Real world problem solving is an order of magnitude more difficult, and as far as CS fundamentals go it’s just seeing if you dusted off your algorithm book from your college program. I will more rigorously demonstrate why this is not useful problem solving, but I’m going to let this quote from Laszlo Bock, SVP of People at Google, from a 2013 New York Times interview say it first:

“On the hiring side, we found that brainteasers are a complete waste of time. How many golf balls can you fit into an airplane? How many gas stations in Manhattan? A complete waste of time. They don’t predict anything. They serve primarily to make the interviewer feel smart.”

I argue that the previous two problems also solidly fall in this category. How many times have I needed a way to check if three integers sum to zero? None. How many times in my career have I needed a monotonic subsequence of integers? None. How many times have I needed a combinatoric solution to anything? Very, very few. Surely, though, Google finds these useful, since they’ve done a solid job of popularizing the algorithms/problem-solving approach? Surely in all their interviews they found something diagnostic? Laszlo Bock again:

“We looked at tens of thousands of interviews, and everyone who had done the interviews and what they scored the candidate, and how that person ultimately performed in their job. We found zero relationship. It’s a complete random mess, except for one guy who was highly predictive because he only interviewed people for a very specialized area, where he happened to be the world’s leading expert.”

How is it possible that with tens of thousands of interviews they were unable to establish a relationship between the interview and on-the-job performance? I argue it’s because this interview technique has no bearing on any actual problem solving in the field. We require a brief analysis of actual problem solving to drive this home.

Actual Problem Solving?

Whiteboard problems have two critical shortcomings: they’re purely static, and they have no gradations of success. They don’t change over time, and the problem is either solved or it isn’t. Problem solving, especially as far as the literature is concerned, is broken down into two major varieties: static and dynamic. If all the information necessary to solve the problem is available from the outset, and you’re only applying previous knowledge, then by definition it’s a static problem. In fact, even the experts writing the measurement and assessment tools have stated that (1) pencil-and-paper formats are insufficient for dynamic problem solving analysis, and (2) domain-specific knowledge can actually harm results (different pre-existing mental models)[die-bonn]. A serious impedance mismatch is in play: what nearly every employer is actively seeking is dynamic problem solving capability. The distinction is explained here in “The Assessment of Problem-Solving Competencies”:

In the first case [static], all problem-related information is available upfront, and the problem situation does not change over time. In the case of dynamic problems, the problem state changes and develops with time and through the respondents’ actions. So actually, dynamic problems are much closer to typical everyday-like problem situations – in a dynamic problem situation some aspects of the problem may aggravate over time whereas others may simply disappear. Static problems are suitable for measuring analytical problem-solving skills; dynamic problems tap a mixture of analytical, complex and dynamic problem-solving abilities.

That latter half sounds much, much closer to how a software engineer actually spends the day.

Onward to the flaw of its black and white nature. The candidate either does, or does not solve the problem. In wrap-ups and feedback sessions it’s quite common to see comments extrapolating the speed at which an individual solves a problem as an indicator of their overall problem solving capability. This relationship is both unfounded and unreplicable. Secondarily, static problem solving recognizes at least four distinct levels of capability [Reeff et al., 2005, p. 202]:

- Content-related reasoning : Achieving limited tasks based on practical reasoning.

- Evaluating : Rudimentary systematic reasoning. “Problems at this level are characterized by well-defined, one-dimensional goals; they ask for the evaluation of certain alternatives with regard to transparent, explicitly stated constraints. At this level, people use concrete logical operations.”[die-bonn]

- Ordering/integrating : “…people will be able to use formal operations (e.g. ordering) to integrate multidimensional or ill-defined goals, and to cope with non-transparent or multiple dependent constraints”[die-bonn]

- Critical Thinking : “At the final and highest level of competency, people are capable of grasping a system of problem states and possible solutions as a whole. Thus, the consistency of certain criteria, the dependency among multiple sequences of actions and other “meta-features” of a problem situation may be considered systematically. Also, at this stage people are able to explain how and why they arrived at a certain solution. This level of problem-solving competency requires a kind of critical thinking and a certain amount of meta-cognition.”[die-bonn]

Take special notice of the wording in levels 3 and 4: multidimensional, ill-defined, non-transparent, multiple dependent constraints, multiple sequences, meta-cognition. At best, whiteboard problems analyze a combination of early computer science classes with static capabilities at no higher level than ‘evaluating’ competency. The skill ceiling is simply too constrained to be diagnostic. It’s not indicative of real world problems, and it’s not even capable of fully measuring the thing it is ostensibly supposed to. Dynamic problem solving as an alternative will be demonstrated in the better options below, but first, let’s finish wrapping up the bad options.

Whiteboard coding

This is as ubiquitous as “problem solving” but doesn’t require anywhere near the level of analysis. Nobody codes this way. No one. At best it’s a “because we’ve always done it this way,” and at worst it’s an intimidation tactic.

A programming language is a directly measurable piece of knowledge. Actual programming is a directly observable skill.

There’s no reason to have someone write code by hand on a whiteboard. Expecting syntactic and lexical correctness in this circumstance is veiled hostility. Tens of thousands of hours have been poured into the tooling surrounding software development to make the process less error-prone, easier, and more efficient. If someone is a career Java developer and used to having IntelliJ, then let that person use IntelliJ. The skill of programming is inescapably connected to its ecosystem of tooling.

Architectural Surprise

I should start by saying that I actually like architectural interviews. These usually involve drawing numerous block diagrams on a board and explaining how pieces of a more complex system fit together. What I don’t like are architectural interviews where the system being architected is previously unknown to the candidate. “Build a thing you’ve never built at a ridiculously high level in front of strangers with only a cursory technical feedback loop.”

Theoretically this is because the interviewer wants to see how the interviewee approaches something novel and applies architectural skills in a vacuum. The problem is it just doesn’t work this way in reality. If you were building something new, it would be done over months to years and involve a tremendous amount of research and input from the business, other engineers, planners, etc. It’s a very convoluted feedback loop. In fact, nearly every large, complicated system I’ve ever worked on has been massively influenced by “soft” factors like how much slack was available on other teams, legal & regulatory frameworks, hardware constraints, etc.

Toward A Better Technical Interview

So what does a good interview look like? A good interview should allow the candidate to showcase their knowledge, skills, and experience in the best light possible (which will also expose competencies) and allow you to compare it to your actual needs. No one should leave the interview with an ambiguous feeling. It absolutely requires a degree of tailoring to the experience level of the candidate. Calibrate success criteria around knowledge and skills accordingly (see Footnote A on bias), make sure the interviewers know it, and especially, make sure the candidate knows it. Nobody likes walking in blind.

Assessing Knowledge and Skills

Knowledge assessment is made fantastically more difficult than it needs to be. Literally talk to the candidate. There’s no need to game the information, hit them with a surprise, or suss it out by proxy. Well-established rubrics of understanding exist. Apply them as consistently as possible. If you need a starting place, albeit a pedantic one, I’d recommend Bloom’s Taxonomy. Here’s an example of the six levels of understanding for a job that requires some degree of Linux package management knowledge:

- Remembering : Being able to remember elementary facts in a category. “Name a Linux package manager.”

- Comprehending : Compare two elements at a high level. “Compare CentOS’s to Ubuntu’s.”

- Applying : Applying previous knowledge to a new piece of external information. “Would CentOS’s package management make sense given this rollout strategy?”

- Analyzing : Break information into component parts and determine interior relationships. “Compare and contrast modern Linux package management. Provide specifics.”

- Synthesizing : Building a structure from diverse patterns and putting parts together to form a whole. “How would you propose managing a package release system for a diverse fleet of Linux systems?”

- Evaluating : Defending opinions by making a judgement of internal evidence combined with external criteria. “What package management + release system would you recommend based on our needs?”

An example interview that I find particularly useful is asking a candidate to draw the architecture of a system they worked on or built (This double-dips as a skill question!). With that drawing in place, and focusing on your particular hiring needs, begin going through this sort of taxonomy approach about how much detail the candidate can provide about any specific piece.

What factors led to this choice? You used JSON; can you think of other alternatives that would have also worked? Given those alternatives, do you believe this one was the best? Knowing what you know now, would you make that same choice again?

The more senior the candidate, the more nuanced and specific the information should be, as well as being cognizant of external softer factors. A junior candidate knows of things and can discuss them, a senior candidate contextualizes, compares, and evaluates under changing criteria. Wash, rinse, repeat for all the skills you need.

Be sure to focus on the interconnectedness of capabilities: it’s possible to bullshit successfully about a single skill, but it’s much harder to bullshit about a constellation of related skills. If you aren’t seeing evidence of a particular skill, then ask the candidate directly. Don’t leave the interview believing that because something didn’t come up, the candidate doesn’t know about it.

Assessing skills + Actual Problem Solving

Seat your candidate in front of a computer and have them use it. All the technical skills we need day-to-day are directly observable. Softer skills can be more difficult to assess, but I find forcing the candidate to engage in communication will surface most of the behavioral problems over the course of a day. There’s no need to come up with some tricky scenario. If git is a required skill, make the candidate use git. It might take more than one problem to cover your bases, but that’s OK too. If you require a burdensome number of skills, maybe reevaluate the role. Stay away from algorithm problems for all the previously stated reasons: they are static, low-ceiling, and domain-specific. I like to think of this guideline this way: you want the complexity to be within the problem, not the solution. The sweet spot for exploring skills, in particular problem solving, is to come up with a fairly generic dynamic problem that incorporates as many other requirements as possible. So what makes a good dynamic problem? Let’s circle back to the promised discussion of dynamic problems.

Dynamic Problem Solving and My New Favorite Questions

Dynamic problem solving has two phases [die-bonn]:

- Knowledge acquisition

- Knowledge application

The problem should begin with a phase of learning how a system works, and then based upon that understanding, manipulate a set of exogenous variables the user can control to get a set of endogenous variables to a goal state. It’s couched in fancy language, but this is about the most perfect academic explanation of debugging I’ve ever heard. Consider this frequent refrain:

Huh. That’s weird. We deployed my code to staging but it’s behaving differently than on my laptop. Now I’m going to spend the afternoon figuring out what’s different. I know the framework makes some environmental choices, but I thought those were accounted for. Is the problem in my code, the library code, my configuration, or something in the environment?

This combines knowledge acquisition (what’s going on?), with exogenous variables (my code + configuration), endogenous variables (the environment + framework), and the potentially extraordinarily complicated hidden relationships between them.

The best example in the literature of how to simulate this without any computer domain knowledge at all is so brilliant that I’m going to suggest using it here:

Set the temperature and humidity of a pretend room based on three unlabeled sliders. Every time you make a change the graphs for temperature and humidity will be updated for a given time t. Make the room comfortable and explain the relationship between each slider and the room.

The language is very straightforward, it’s a problem we’ve all dealt with, and it relies on no special information. It’s essentially debugging the room temperature. Behind the scenes, though, this is a highly tunable problem that can be randomly generated. Consider this less-showy incarnation of a similar example from the DYNAMIS computer-based assessment [Blech].

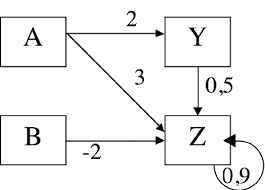

DYNAMIS example network

The user is in control of A and B, and the user can see the output of Y and Z. None of the edges connecting the variables or their values are known. In order to get Y and Z into a goal state the user would have to uncover the following relationship types:

- Direct simple effect: B has a direct impact on Z.

- Multiple effect: A influences Y and Z.

- Multiple dependency: Z can be influenced by both A and B.

- Eigendynamics: Z can modify itself.

- Side effects: Y can modify Z.
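To make those five relationship types concrete, here is a minimal sketch of such a network as a discrete-time linear system. This is an illustration only: the class name, the linear update rule, and every coefficient are assumptions invented for this example and are not taken from the DYNAMIS material. The candidate would only ever see the inputs they set and the Y and Z readings that come back.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class DynamisSystem:
    """Hypothetical two-input, two-output system in the spirit of DYNAMIS.

    A and B are the exogenous inputs the candidate sets on each step;
    Y and Z are the endogenous outputs the candidate observes.
    All coefficients below are invented for illustration.
    """
    y: float = 0.0
    z: float = 0.0
    a_to_y: float = 2.0   # multiple effect: A influences Y ...
    a_to_z: float = 1.0   # ... and A also influences Z
    b_to_z: float = 3.0   # direct simple effect: B influences Z
    y_to_z: float = 0.5   # side effect: Y leaks into Z
    z_self: float = 0.9   # eigendynamics: Z keeps only 90% of itself per step

    def step(self, a: float, b: float) -> Tuple[float, float]:
        """Apply one round of inputs and return the new (Y, Z) readings."""
        prev_y, prev_z = self.y, self.z
        self.y = prev_y + self.a_to_y * a
        self.z = (self.z_self * prev_z      # eigendynamics
                  + self.a_to_z * a         # multiple dependency: A ...
                  + self.b_to_z * b         # ... and B both feed Z
                  + self.y_to_z * prev_y)   # side effect from the previous Y
        return self.y, self.z


if __name__ == "__main__":
    system = DynamisSystem()
    # A candidate might probe one input at a time and watch what moves.
    for a, b in [(1, 0), (0, 0), (0, 1), (0, 0)]:
        y, z = system.step(a, b)
        print(f"A={a} B={b} -> Y={y:.2f} Z={z:.2f}")
```

Note that Z keeps moving even when both inputs are held at zero, because of the eigendynamics and the side effect from Y. That is exactly the kind of behavior a candidate has to notice and explain.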

Here’s why I love this problem: even if you’ve solved one instance of this, it won’t help you with another. The complexity is embedded within the problem, not the answer. This is much more like a game that you and a candidate can pair on, which also helps prevent interviewer fatigue. Move it behind an API server, and build a client to interact with it. Build more tools if you think it will help. If you solve an easy instance, generate a new one and do that one. The problem is essentially the same as a generic differential equation solver, so the expectation definitely isn’t on finding a working strategy, but rather on making it usable for a human and assessing needs. If the candidate is fully prepped on the problem, then great, turn up the difficulty! If they display expert-level ability to tease apart variables, can code a client + assistive tooling, and have all the requisite knowledge from above, then you should have very strong positive signals.

Additionally, numerous options exist to make this easier or harder:

- Number of variables

- Number of relations

- Random components: Hidden variables influencing endogenous variables in non-systemic ways. (Have you ever had a system experiencing heat related failure? It’s a nightmare the first time you encounter it.)

- Delayed effect: Think propagation delay in relationships.

- Time windowing + no reset: Limit the number of steps the user has or don’t allow the system to be reset.

- Transparency: How much of the system you reveal at the beginning.
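A few of these knobs can be sketched as a small generator. This is a rough illustration under stated assumptions, not a DYNAMIS implementation: the function names `generate_instance` and `simulate`, the linear update rule, and the set of possible weights are all hypothetical. It covers the number of variables, the number of relations, a random component, and a fixed step budget with no reset; delayed effects and transparency are left out to keep it short.

```python
import random
from typing import Dict, List, Tuple

Edges = Dict[Tuple[str, str], float]


def generate_instance(n_inputs: int, n_outputs: int, n_relations: int,
                      seed: int) -> Edges:
    """Pick n_relations random weighted edges of the form (source, output).

    Sources may be inputs or outputs, so an output pointing at itself is
    eigendynamics and an output pointing at another output is a side effect.
    """
    rng = random.Random(seed)
    inputs = [f"in{i}" for i in range(n_inputs)]
    outputs = [f"out{i}" for i in range(n_outputs)]
    candidates = [(src, dst) for src in inputs + outputs for dst in outputs]
    if n_relations > len(candidates):
        raise ValueError("more relations requested than possible edges")
    chosen = rng.sample(candidates, n_relations)
    return {edge: rng.choice([-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]) for edge in chosen}


def simulate(edges: Edges, settings: List[Dict[str, float]], n_outputs: int,
             noise: float = 0.0, seed: int = 0) -> List[Dict[str, float]]:
    """Run one step per entry in settings and return the output history.

    The length of settings is the step budget, and state is never reset.
    noise is the standard deviation of a random component added to every
    output on every step (0.0 keeps the system deterministic).
    """
    rng = random.Random(seed)
    state = {f"out{i}": 0.0 for i in range(n_outputs)}
    history = []
    for inputs in settings:
        values = {**state, **inputs}  # previous outputs plus current inputs
        new_state = {}
        for name in state:
            # Without an explicit self edge, an output simply keeps its value.
            total = 0.0 if (name, name) in edges else state[name]
            for (src, dst), weight in edges.items():
                if dst == name:
                    total += weight * values.get(src, 0.0)
            if noise > 0:
                total += rng.gauss(0.0, noise)
            new_state[name] = total
        state = new_state
        history.append(dict(state))
    return history


if __name__ == "__main__":
    instance = generate_instance(n_inputs=3, n_outputs=2, n_relations=5, seed=42)
    moves = [{"in0": 1.0}, {"in1": 1.0}, {"in2": 1.0}, {}]
    for step, reading in enumerate(simulate(instance, moves, n_outputs=2, noise=0.1)):
        print(step, reading)
```

Seeding the generator means an interviewer can hand two candidates the same instance, or a fresh one each time, while keeping the difficulty settings fixed.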

Known Architecture

I’m only going to modify this slightly from the version I dislike. I prefer having candidates draw the architecture of a system they know well. I believe this is significantly more instructive than a surprise choice.

- It uncovers the extent to which they can explain something they worked on daily for an extended period of time.

- You can ask “what was the hardest part of this for you?” You can explore whether it was based on technical difficulty or organizational difficulty. Organizational difficulty is a fantastic springboard for a lot of behavioral questions. This also makes it easy to lead into “What would you do differently without that constraint?”

- People fall back on what they know in rough situations or under duress. This is particularly important if your organization hasn’t quite dialed in its mentorship and the candidate will be flying solo. If you can find out about a large system someone is proud of, you can get a window into what they will likely build again given free rein and tight deadlines. But like I said above, just ask too: “Would you use these patterns again if given the opportunity?”

Conclusions

There are no panaceas for technical interviewing. There isn’t a perfect solution, and nothing will work for every company all the time. That being said, I believe we can definitely do far better. The key takeaways:

- Understand the role you’re hiring for by making sure the knowledge and skills are both enumerable and measurable.

- Unless you’re in the middle of an architectural discussion or the candidate needs a diagram to clarify, no whiteboards.

- Nothing beats having the candidate use a real computer.

- Minimize the surprises and let the candidate play to their strengths; you would want this if they were an employee anyway.

- Simple problems with complex solutions will bore your interviewers and they aren’t indicative anyway.

- Favor dynamic over static problems.

Footnote A: On Bias

I would be remiss to write this much on hiring without offering a note on bias. Much has been written elsewhere and I absolutely believe that marginalized groups are treated unfairly in the hiring process. My minor contribution to this discussion is to treat knowledge and skills as things a person has, and not the place where they came from. I believe that the industry engages in classist, ageist, college-centric hiring practices to the detriment of us all. Hire people that know what they’re doing and stop worrying about Big-O notation.

Secondly, much of the literature clearly states that both knowledge and skills depend on opportunity: knowledge requires the opportunity to learn, and skills require the opportunity to apply that knowledge. The result is that both of these are social constructs. You will always have the inherent biases of the interviewers and your corporate culture to contend with, which is why fair grading and honesty are critical.

This is honestly worthy of its own field of study and I welcome additional notes.

Footnote B: On “CS Fundamentals”

Can we please just stop using this phrase? I graduated from an accredited CS program and the coursework included: discrete math, calculus, linear algebra, programming, theory of language, theory of computation, algorithms 1, algorithms 2, advanced algorithms, digital signal processing, operating systems, computer architecture, networks, software engineering, and the list goes on for a while. When we say “CS fundamentals” we almost always end up targeting about a week’s worth of lectures somewhere in a freshman/sophomore year program, especially on algorithms you shouldn’t be implementing. Calling this tiny amount of information “CS fundamentals” is phenomenally disingenuous.

Bibliography

Hartle, F. (1995) How to Re-engineer your Performance Management Process, London: Kogan Page.

Blech, Christine & Funke, Joachim. (2005). Dynamis review: An overview about applications of the Dynamis approach in cognitive psychology https://www.researchgate.net/publication/228353257_Dynamis_review_An_overview_about_applications_of_the_Dynamis_approach_in_cognitive_psychology

[die-bonn] The Assessment of Problem-Solving Competencies. https://www.die-bonn.de/esprid/dokumente/doc-2006/reeff06_01.pdf

In Head-Hunting, Big Data May Not Be Such a Big Deal. The New York Times (2013). www.nytimes.com

Reeff, J.-P./Zabal, A./Klieme, E. (2005): ALL Problem Solving Framework. In: Murray, T. S./Clermont, Y./Binkley, M. (2005): International Adult Literacy Survey. Measuring Adult Literacy and Life Skills: New Frameworks for Assessment. Ottawa

Bloom’s Taxonomy on Wikipedia https://en.wikipedia.org/wiki/Bloom%27s_taxonomy#The_cognitive_domain_(knowledge-based)